Building a website analysis system like GA

Posted on: 1/20/2025 3:09:00 PM

Five years ago, a client approached me with a request to build a system that could track and analyze website traffic, similar to Google Analytics. They needed a solution that was easy to customize, highly secure, and capable of performing better than the existing tools available at the time.

After carefully considering the requirements, I decided to create X Tracking, a system designed to collect, process, and analyze user activity data in real time. We built it on a microservices architecture to keep it flexible, scalable, and optimized for high performance. The system has now been running stably for four years, monitoring more than 5,000 websites and ingesting tens of millions of data records per day. Today, I want to share how I implemented it.

Before designing the system, we outlined the key metrics to collect and their importance:

Session: Represents a single visit by a user. It helps identify how users interact during each visit.

Pageviews: Tracks the number of pages viewed by users, providing insights into user engagement.

Visits: Measures the total number of visits, counting repeat visits by the same user, highlighting website traffic trends.

Unique Visits: Counts distinct users so that the same individual is not counted multiple times, offering a clearer view of audience reach.

Screen type and resolution: Provides information about the types and sizes of devices used, aiding in responsive design optimization.

Referer: Identifies the source of traffic (e.g., search engines, social media, direct links), allowing better marketing strategies.

Time on site: Indicates how long users spend on the website, reflecting user interest and content quality.

Clicks and Interactions: Tracks user behavior and navigation patterns for UI/UX improvements.

Location: Captures geographic data to understand where users are accessing the website from.

Access time: Identifies the most active timeframes, helping optimize content delivery and server performance.

Average page load time: Measures how long pages take to load, crucial for improving user experience and SEO rankings.

Bounce rate: Indicates the percentage of visitors who leave after viewing a single page, highlighting potential engagement issues.

...
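To make a few of these definitions concrete, here is a minimal sketch of how pageviews, visits, unique visitors, bounce rate, and average time on site could be derived from raw pageview events. The `PageView` record and its field names are assumptions made for illustration, not the actual X Tracking schema, and time on site is approximated as the gap between the first and last pageview of a session.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PageView:
    # Hypothetical event shape; field names are illustrative only.
    visitor_id: str   # anonymous identifier for the user
    session_id: str   # identifier for a single visit
    url: str
    timestamp: float  # seconds since the epoch


def summarize(views: list[PageView]) -> dict[str, float]:
    """Derive a few headline metrics from raw pageview events."""
    by_session: dict[str, list[PageView]] = defaultdict(list)
    for view in views:
        by_session[view.session_id].append(view)

    sessions = len(by_session)
    # A bounce is a session that contains exactly one pageview.
    bounces = sum(1 for events in by_session.values() if len(events) == 1)
    # Time on site per session: last pageview minus first pageview.
    total_time = sum(
        max(e.timestamp for e in events) - min(e.timestamp for e in events)
        for events in by_session.values()
    )
    return {
        "pageviews": len(views),
        "visits": sessions,
        "unique_visitors": len({v.visitor_id for v in views}),
        "bounce_rate": bounces / sessions if sessions else 0.0,
        "avg_time_on_site": total_time / sessions if sessions else 0.0,
    }
```

Note that a single-page session contributes zero seconds under this approximation, which is one reason real systems often send extra heartbeat or unload events.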

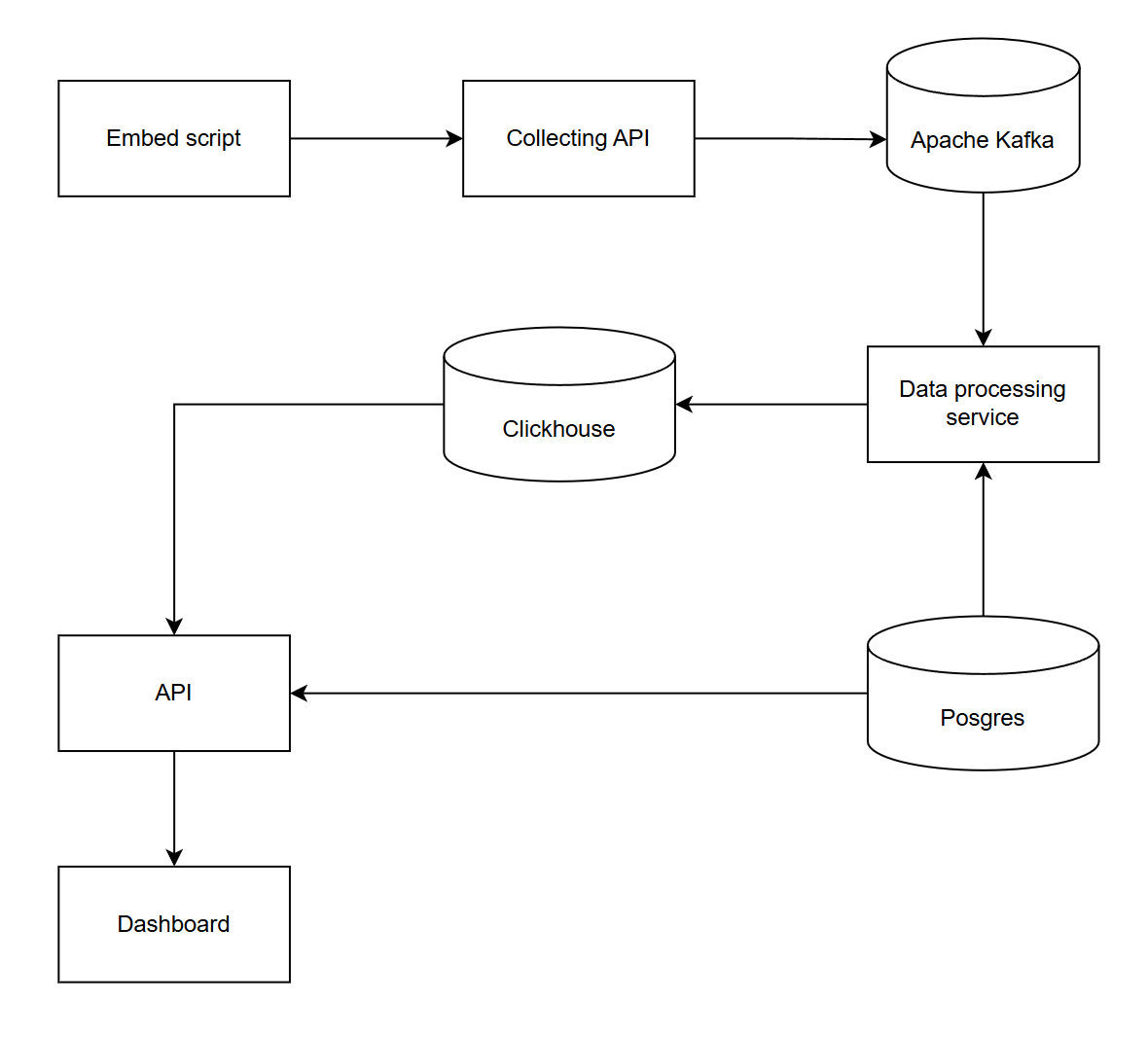

Our system consists of four main components:

Client script

A lightweight JavaScript snippet embedded into the websites being tracked.

Collects information such as session identifiers, clicks, browser details, screen resolution, scroll depth, and interactions.

Sends collected data in JSON format to the API via HTTP.

Data collection API

Receives data sent by the client script.

Ensures secure and reliable data transmission.

Pushes raw data into a message queue (e.g., Apache Kafka) for further processing.
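As a rough illustration of the collection step, the sketch below validates one incoming JSON payload and hands it to a queue. Everything specific here is an assumption: the required field names, the size limit, and the returned status codes are hypothetical, and Python's in-process `queue.Queue` merely stands in for a real message broker such as Kafka.

```python
import json
import queue

# Assumed schema and limits, chosen only for this example.
REQUIRED_FIELDS = ("site_id", "session_id", "event_type", "url")
MAX_BODY_BYTES = 16 * 1024


def handle_collect(body: bytes, client_ip: str, out: "queue.Queue[str]") -> int:
    """Validate one tracking request and enqueue it; returns an HTTP status code."""
    if len(body) > MAX_BODY_BYTES:
        return 413  # payload too large
    try:
        event = json.loads(body)
    except ValueError:  # covers invalid JSON and undecodable bytes
        return 400
    if not isinstance(event, dict):
        return 400
    for field in REQUIRED_FIELDS:
        value = event.get(field)
        if not isinstance(value, str) or not value:
            return 400
    # Attach facts observed by the server rather than reported by the client.
    event["ip"] = client_ip
    # Stand-in for publishing the raw event to the message queue.
    out.put(json.dumps(event))
    return 202  # accepted for asynchronous processing
```

Keeping the handler this thin means the API can acknowledge requests quickly and leave the heavier work to the processing service.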

Data processing service

Consumes raw data from the queue.

Processes the data to determine user sessions, map IPs to locations, and organize events into a structured format.

Stores structured data in ClickHouse for fast analytics.
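One common way to determine sessions on the server side is an inactivity timeout: a visitor's events belong to the same session until the gap between two consecutive events exceeds a threshold. The sketch below assumes a 30-minute timeout and a hypothetical `Event` shape. It illustrates the general technique only; it is not necessarily how X Tracking does it, since the client script also sends its own session identifiers.

```python
from dataclasses import dataclass

SESSION_TIMEOUT = 30 * 60  # seconds of inactivity; an assumed value


@dataclass
class Event:
    # Hypothetical structured event; field names are illustrative only.
    visitor_id: str
    timestamp: float  # seconds since the epoch
    url: str


def sessionize(events: list[Event], timeout: float = SESSION_TIMEOUT) -> list[list[Event]]:
    """Group events into sessions, starting a new one after `timeout` idle seconds."""
    sessions: list[list[Event]] = []
    # Per visitor: (timestamp of their latest event, the session it belongs to).
    last_seen: dict[str, tuple[float, list[Event]]] = {}
    for event in sorted(events, key=lambda e: (e.visitor_id, e.timestamp)):
        previous = last_seen.get(event.visitor_id)
        if previous is None or event.timestamp - previous[0] > timeout:
            current: list[Event] = []
            sessions.append(current)
        else:
            current = previous[1]
        current.append(event)
        last_seen[event.visitor_id] = (event.timestamp, current)
    return sessions
```

A batch version like this is easy to reason about; a streaming consumer would keep the same per-visitor state but update it one event at a time.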

Reporting API and Dashboard

API for analytics and management:

Provides endpoints for retrieving analytics data (e.g., metrics like sessions, pageviews, and bounce rates).

Handles user and page management, role-based access control, and system configuration.

Dashboard UI:

Built with Vue.js for a modern, responsive, and user-friendly interface.

Visualizes analytics data with charts, tables, and real-time metrics.

Initially, the system used Elasticsearch as the primary database for analytics. However, over time, as the data volume increased, Elasticsearch struggled to handle queries involving grouping or aggregation efficiently. After extensive research, we transitioned to ClickHouse (an OLAP database), which proved to be a much more suitable choice due to its exceptional query performance and ability to process large-scale data effectively.
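For readers unfamiliar with this kind of workload, the sketch below shows the shape of a grouped aggregation query that a reporting layer typically issues. It uses Python's built-in `sqlite3` purely as a stand-in so the example runs anywhere; the `events` table and its columns are hypothetical. The example says nothing about performance, only about the type of query involved.

```python
import sqlite3


def pageviews_per_site(conn: sqlite3.Connection) -> list[tuple[str, int, int]]:
    """Return (site_id, pageviews, sessions) for every site, ordered by site_id."""
    return conn.execute(
        """
        SELECT site_id,
               COUNT(*)                   AS pageviews,
               COUNT(DISTINCT session_id) AS sessions
        FROM events
        GROUP BY site_id
        ORDER BY site_id
        """
    ).fetchall()


# Tiny in-memory data set with a hypothetical schema.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (site_id TEXT, session_id TEXT, url TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        ("site-1", "s1", "/"),
        ("site-1", "s1", "/pricing"),
        ("site-1", "s2", "/"),
        ("site-2", "s3", "/"),
    ],
)
print(pageviews_per_site(conn))
```

Queries like this scan many rows but read only a few columns, which is the access pattern column-oriented OLAP databases are designed around.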

Alongside ClickHouse, the system relies on two supporting data stores:

- Redis cache: Stores frequently accessed data, such as real-time metrics and intermediate computations, to enhance system responsiveness.

- PostgreSQL: Used to store essential management data, including information about tracked websites, administrators, user roles, and IP-to-location mappings.
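The caching idea can be sketched as a small cache-aside helper: return a stored value while it is still fresh, otherwise recompute it and store it with an expiry. The `TTLCache` class below is a hypothetical in-memory stand-in for a Redis key with a time-to-live, written only to show the pattern; it does not use a Redis client.

```python
import time
from typing import Callable


class TTLCache:
    """In-memory stand-in for cached keys that expire after a fixed time."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the expiry logic can be tested
        self._items: dict[str, tuple[float, object]] = {}

    def get_or_compute(self, key: str, compute: Callable[[], object]) -> object:
        """Return the cached value for `key`, recomputing it once it has expired."""
        now = self._clock()
        hit = self._items.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]
        value = compute()
        self._items[key] = (now + self._ttl, value)
        return value
```

A dashboard endpoint could wrap an expensive query in `get_or_compute` with a short expiry, so repeated refreshes within a few seconds do not hit the analytics database again.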

This article provides a high-level overview of the architecture without diving into detailed implementation steps. If you have any questions or need further clarification, don’t hesitate to let me know.

Disclaimer: The opinions expressed in this blog are solely my own and do not reflect the views or opinions of my employer or any affiliated organizations. The content provided is for informational and educational purposes only and should not be taken as professional advice. While I strive to provide accurate and up-to-date information, I make no warranties or guarantees about the completeness, reliability, or accuracy of the content. Readers are encouraged to verify the information and seek independent advice as needed. I disclaim any liability for decisions or actions taken based on the content of this blog.